When building AI tools for compliance or security work, you might quickly run into a problem: copy-paste fatigue.

LLMs can help users with internal processes, compliance tasks, and questions about policies, but there’s friction. Users first have to pull data from their tools, copy it over to the LLM, and only then complete their task.

If you do this once or twice, it works well enough. When you’re doing it every day, however, that friction adds up. Users either stop updating the data they’re feeding into the LLM, or they stop using the tool altogether. The result is stale data, hallucinated answers that aren’t anchored to the truth, and no real efficiency gains.

The solution is to build AI tools that stay grounded in your real environment. Instead of forcing users to copy and paste data, the system already knows the current state. It pulls live information, applies organizational context, and keeps responses anchored to what is true and relevant right now.

With that foundation, a user can just ask a question or start the task at hand. The system retrieves the relevant policies, parameters, and live operational data, then returns an answer tailored to their environment.

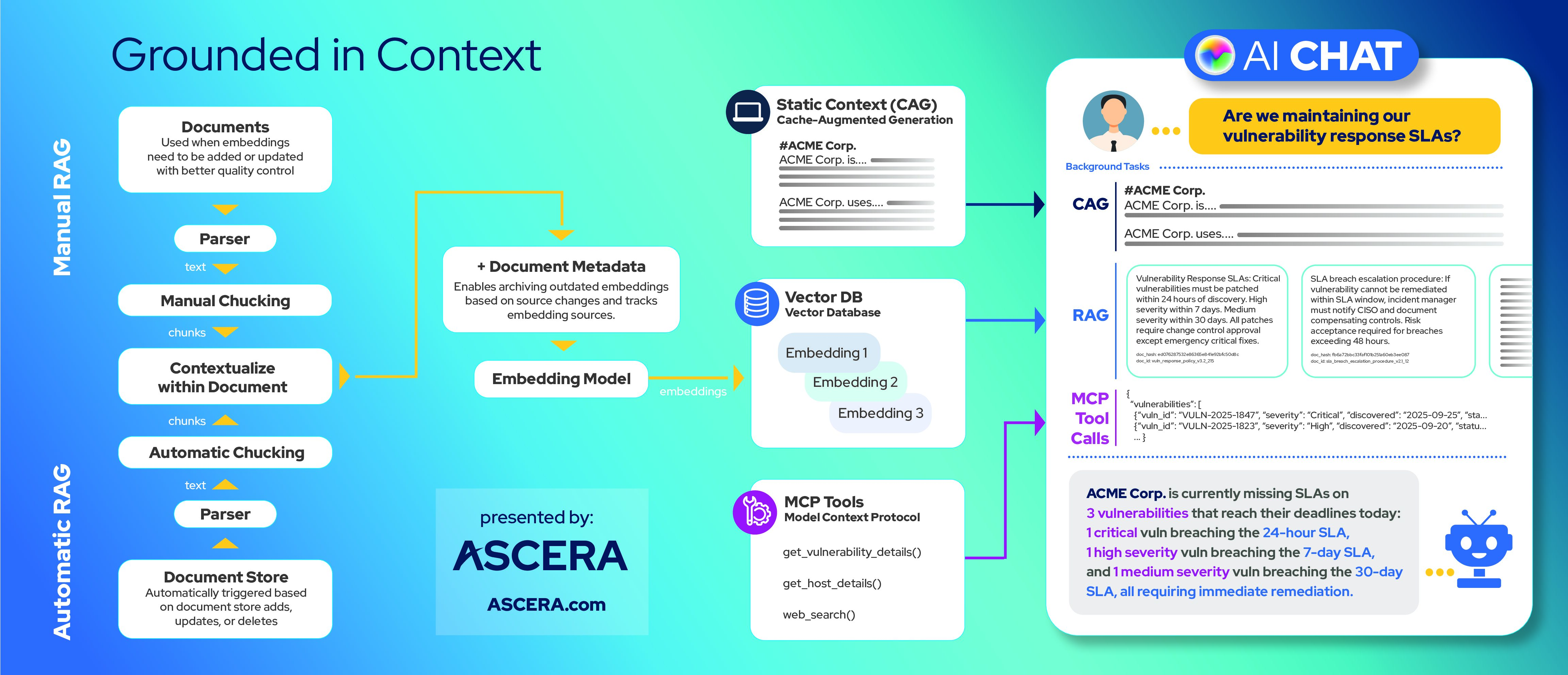

The rest of this article breaks down the architecture required to make that possible. The accompanying diagram provides a glimpse at the complete architecture this article will explore.

Looking for a higher-level overview of using AI for cyber compliance including safe practices, risks, benefits, and use cases? Read this blog.

What is RAG?

RAG stands for Retrieval Augmented Generation. It’s the primary technique used to reduce hallucinations and ground LLMs in real data.

Here’s how it works at a high level:

- Take your documents and break them into chunks

- Send those chunks through an embedding model, which creates a mathematical representation called a vector or embedding

- Store these embeddings in a vector database

- When a user asks a question, convert that question into an embedding using the same embedding model

- Perform a similarity search to find which embeddings in your database are closely related to the question

- Return the chunks behind those embeddings as context to the LLM

Think of embeddings like flashcards. Each chunk of your document becomes a flashcard, and when you ask a question, the system finds the flashcards that are most relevant to that question and shows them to the LLM. The LLM uses that context to generate a more accurate and grounded response.
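The steps above can be sketched in a few lines of Python. This is a toy illustration, not a production pipeline: the `embed` function is a bag-of-words stand-in for a real embedding model, the in-memory `index` list stands in for a vector database, and the three policy sentences are made up for the example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical chunks; a real system would produce these from your documents.
chunks = [
    "Critical vulnerabilities must be remediated within 24 hours.",
    "SIEM logs are retained for 12 months.",
    "Employees must complete security training annually.",
]

# Stand-in for a vector database: (chunk text, embedding) pairs.
index = [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(question: str, k: int = 1) -> list[str]:
    """Embed the question with the same model and return the k closest chunks."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


top = retrieve("How long are SIEM logs retained?")
print(top)
```

The text of the best-matching chunk, not the raw vector, is what gets handed to the LLM as context.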

The chunking and similarity search steps described here are deliberately simple. There are many more sophisticated techniques you can implement to improve this process, including semantic chunking, hierarchical retrieval, hybrid search, and reranking strategies. We won’t be covering these topics in this article, but they are worth exploring as you refine your RAG pipeline.

Manual RAG vs. Automatic RAG

There are two approaches to building your RAG pipeline, depending on your data and use case.

Manual RAG

Use manual RAG when you have a smaller set of somewhat structured data and, most importantly, when chunk quality is critical.

For example, consider a large policy document that’s well-structured into sections. You want to ensure that each chunk contains a complete thought or section. Rather than using an automatic chunking method that might break up sentences or paragraphs arbitrarily, you manually review and chunk the document. You may use a systematic approach to chunk the document, but the human is in the loop to ensure the quality of the chunks.

Automatic RAG

Use automatic RAG when you have a large volume of data with varying structures, and you need to ensure all that data is available for search.

For example, if you have a document store full of policy documents that are constantly being updated, you can establish a chunking method that works across all of them. You might chunk at every paragraph, or after every 100 words with some overlap between chunks.

The point is that you don’t need someone to manually review every document and re-chunk it every time it updates. You establish a chunking method that works well, automate it, and let it run. There are several chunking techniques you can use depending on what works best for your documents.
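As a minimal sketch of the “every 100 words with some overlap” idea, the function below splits text on whitespace and slides a fixed-size window across it. The function name `chunk_words` and the default overlap of 20 words are assumptions chosen for illustration; the right values depend on your documents.

```python
def chunk_words(text: str, size: int = 100, overlap: int = 20) -> list[str]:
    """Split text into chunks of `size` words, each sharing `overlap` words
    with the previous chunk."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        # Stop once the current window has reached the end of the document.
        if start + size >= len(words):
            break
    return chunks


# A synthetic 250-word "document": w0 w1 ... w249
sample = " ".join(f"w{i}" for i in range(250))
parts = chunk_words(sample, size=100, overlap=20)
print(len(parts))
```

Because the function is deterministic, it can be rerun automatically whenever a document changes, with no human review step.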

Hybrid Approach

In practice, many organizations use a hybrid approach, applying manual RAG to critical policy documents where precision matters and automatic RAG to larger document repositories where coverage is more important than perfect chunking.

Contextualization

In this step, we ensure that the chunks are contextualized within the document they are part of. This helps to ensure chunks are not isolated pieces of text but are understood in relation to the whole document.

For instance, you may have a chunk detailing information about a retention policy without explicitly mentioning that it relates to your SIEM. Here is some information you could add, with the help of an LLM, to ensure the chunk is well contextualized within the document:

- The year the document was published

- The name of the document

- What section the chunk appeared in

By adding contextual information about the original document, you help the similarity search process return more accurate results and also help the LLM understand the relevance of that chunk.
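One simple way to attach this context is to prepend a short header to each chunk before embedding it. The sketch below uses a fixed template rather than an LLM; the `ChunkContext` class, the header format, and the document name are all hypothetical. An LLM-generated summary sentence could be substituted for the header.

```python
from dataclasses import dataclass


@dataclass
class ChunkContext:
    document_name: str
    year: int
    section: str


def contextualize(chunk: str, ctx: ChunkContext) -> str:
    """Prepend document-level context so the chunk is meaningful on its own."""
    header = (
        f"[Document: {ctx.document_name} | "
        f"Year: {ctx.year} | Section: {ctx.section}]"
    )
    return f"{header}\n{chunk}"


# Hypothetical document details, for illustration only.
ctx = ChunkContext("Log Management Policy", 2024, "SIEM Retention")
contextualized = contextualize("Logs are retained for 12 months.", ctx)
print(contextualized)
```

The original chunk never mentions the SIEM, but the contextualized version does, so a question about SIEM retention now has something to match against.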

Adding Metadata

Similar to contextualization, adding metadata to your chunks helps manage them in a more systematic way. Here are some examples of metadata you might add:

- Page number the chunk came from

- Filename

- Hash of the document

Some of this metadata is useful for auditability and traceability, while other metadata helps manage the lifecycle of your embeddings. For example, storing a hash of the document allows you to detect when a document has changed. You can then use that information to update or archive embeddings as needed.
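Here is a minimal sketch of hash-based change detection using SHA-256 from Python’s standard library. The metadata field names, the filename, and the sample policy text are assumptions made for the example.

```python
import hashlib


def document_hash(content: bytes) -> str:
    """Return a stable fingerprint of the document's bytes."""
    return hashlib.sha256(content).hexdigest()


def build_metadata(filename: str, page: int, content: bytes) -> dict:
    """Metadata stored alongside each chunk's embedding."""
    return {
        "filename": filename,
        "page": page,
        "doc_hash": document_hash(content),
    }


# Two versions of a hypothetical policy document.
v1 = b"Critical vulnerabilities: remediate within 48 hours."
v2 = b"Critical vulnerabilities: remediate within 24 hours."

stored = build_metadata("vuln-policy.pdf", 3, v1)

# Later, compare the current file's hash with the stored one.
has_changed = document_hash(v2) != stored["doc_hash"]
print(has_changed)
```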

Embedding

At this stage, the original documents have been chunked, contextualized, and enriched with metadata. Now it’s time to convert those chunks into embeddings.

An embedding model is used to transform each chunk of text into a vector representation. The embedding model processes the text and generates a high-dimensional vector that captures the semantic meaning of the text. This vector is called an embedding. An embedding may look something like this:

[0.11, -0.35, 0.13, 0.41…, 0.71]

In this high-dimensional space, embeddings that are semantically similar will be located closer together. This allows us to perform similarity searches later on.
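“Closer together” is commonly measured with cosine similarity, although other distance metrics are also used. The sketch below computes it for three hand-made, three-dimensional vectors; the values are invented for illustration, and real embeddings typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Invented embeddings for three chunks.
retention_policy = [0.9, 0.1, 0.0]
log_storage = [0.8, 0.2, 0.1]
vacation_policy = [0.0, 0.1, 0.9]

close = cosine_similarity(retention_policy, log_storage)
far = cosine_similarity(retention_policy, vacation_policy)
print(close > far)
```

The two log-related vectors score near 1, while the unrelated vector scores near 0.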

CAG, RAG, and MCP

RAG is just one piece of the puzzle. There are several other tools and techniques you can use to further enhance the LLM’s ability to provide accurate and relevant responses. Here are some additions that can be used at inference time by the LLM to enhance its capabilities and reduce hallucinations:

CAG (Cache/Context Augmented Generation)

CAG provides general context about your organization that gets injected into every conversation automatically. This might include information such as:

- Your company name

- The industry you operate in

- The tools you use (SIEM, vulnerability scanner, etc.)

- Your compliance frameworks

Users don’t have to repeat this context every time. It’s present in the background, helping anchor the LLM’s responses to your specific environment.
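In practice, this often amounts to building a system prompt from a stored organization profile and prepending it to every conversation. The sketch below assumes the role/content message format used by many chat APIs; the profile values other than the company name (the industry and the frameworks) are hypothetical placeholders.

```python
# Organization profile loaded once and reused for every conversation.
ORG_CONTEXT = {
    "company": "ACME Corp.",
    "industry": "financial services",        # hypothetical
    "tools": ["SIEM", "vulnerability scanner"],
    "frameworks": ["SOC 2", "ISO 27001"],    # hypothetical
}


def build_messages(user_question: str, org: dict = ORG_CONTEXT) -> list[dict]:
    """Inject organizational context ahead of the user's question."""
    system = (
        f"You are a compliance assistant for {org['company']}, "
        f"which operates in {org['industry']}. "
        f"Tools in use: {', '.join(org['tools'])}. "
        f"Compliance frameworks: {', '.join(org['frameworks'])}."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_question},
    ]


messages = build_messages("Are we maintaining our vulnerability response SLAs?")
print(messages[0]["content"])
```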

RAG (Retrieval Augmented Generation) – Vector Based

We’ve already covered RAG in detail, but to reiterate, RAG serves as your knowledge base. Now that the knowledge base is stored in a vector database, here’s a simple outline of how RAG works at inference time:

- The user asks a question

- The question is converted into an embedding using the same embedding model

- The embedding of the question is used to search the vector database for similar embeddings

- The chunks behind the matching embeddings are returned as context to the LLM

MCP (Model Context Protocol)

MCP is a common protocol that expands the LLM’s capabilities by giving it access to tools. It isn’t the only way to achieve this, though: any form of function calling or tool calling works, and MCP is just one implementation.

The key concept is that you’re giving the LLM the ability to fetch live data on its own. For example:

- Query your vulnerability scanner for current vulnerabilities

- Check your SIEM for recent alerts

- Pull data from your ticketing system

Depending on the capability of the tool being queried, the LLM might even construct a custom query. For instance, after RAG provides the SLA ranges, the LLM could build a query to look for tickets that fall outside of those ranges.

This is how you integrate real-time data into the LLM’s responses without having to manually provide it.
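The sketch below illustrates the general tool-calling pattern, not the MCP wire protocol itself. It assumes the LLM emits a JSON object naming a tool and its arguments, which the application then executes. The `get_open_tickets` tool, its canned data, and the JSON shape are all hypothetical.

```python
import json
from typing import Callable

# Registry of functions the LLM is allowed to call.
TOOLS: dict[str, Callable[..., list[dict]]] = {}


def tool(fn):
    """Register a function as a callable tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_open_tickets(min_age_days: int = 0) -> list[dict]:
    """Stub for a ticketing system query; returns canned data."""
    tickets = [{"id": "T-1", "age_days": 2}, {"id": "T-2", "age_days": 12}]
    return [t for t in tickets if t["age_days"] >= min_age_days]


def dispatch(tool_call_json: str) -> list[dict]:
    """Execute a tool call that the LLM emitted as JSON."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# The LLM constructs a custom query: tickets older than a 7-day SLA.
result = dispatch('{"name": "get_open_tickets", "arguments": {"min_age_days": 7}}')
print(result)
```

Restricting execution to a registry of known functions keeps the LLM from invoking anything you have not explicitly exposed.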

Putting It All Together: A Real Example

Let’s walk through a real query to see how all three tools work together.

User asks: “Are we maintaining our vulnerability response SLAs?”

This is a question you can’t simply ask a public LLM without first uploading substantial context. But with a properly built RAG pipeline and integrations, the LLM can answer this question accurately and in real time.

Step 1: CAG provides background context

The LLM already knows from CAG that:

- Your company is ACME Corp.

- You use a specific vulnerability scanner

- You operate in a regulated industry with compliance requirements

Step 2: RAG retrieves relevant knowledge

The system converts your question into an embedding and searches the vector database. It might return chunks such as:

- A chunk from your vulnerability management policy detailing how SLAs are defined and measured

- A chunk describing your escalation process when SLAs are breached

All of this gets added as context to the LLM automatically.

Step 3: MCP fetches live data

Now the LLM knows what your SLAs are from RAG, but it needs current vulnerability data. So it makes a tool call to your vulnerability scanner:

get_vulnerability_details()

This returns live data about all your current vulnerabilities, including their severity and how long they’ve been open. The LLM might even construct a more advanced query based on the SLA ranges it learned from RAG, specifically looking for vulnerabilities that exceed those thresholds.
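To make the threshold comparison concrete, here is a sketch that checks scanner results against SLA windows. The 24-hour, 7-day, and 30-day windows mirror the sample response in this walkthrough; the body of `get_vulnerability_details`, its return fields, and the vulnerability IDs are stubbed assumptions, since a real scanner API will differ.

```python
from datetime import timedelta

# SLA windows as they might be extracted from the policy chunks returned by RAG.
SLA = {
    "critical": timedelta(hours=24),
    "high": timedelta(days=7),
    "medium": timedelta(days=30),
}


def get_vulnerability_details() -> list[dict]:
    """Stub for the scanner tool call; returns canned, hypothetical data."""
    return [
        {"id": "VULN-1", "severity": "critical", "open_for": timedelta(hours=30)},
        {"id": "VULN-2", "severity": "high", "open_for": timedelta(days=3)},
        {"id": "VULN-3", "severity": "medium", "open_for": timedelta(days=45)},
    ]


def find_breaches(vulns: list[dict], sla: dict) -> list[dict]:
    """Return vulnerabilities that have been open longer than their SLA allows."""
    return [
        v for v in vulns
        if v["severity"] in sla and v["open_for"] > sla[v["severity"]]
    ]


breaches = find_breaches(get_vulnerability_details(), SLA)
breached_ids = [v["id"] for v in breaches]
print(breached_ids)
```

Doing this comparison in code, rather than asking the LLM to do date arithmetic in its head, keeps the final answer traceable to explicit thresholds.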

Step 4: LLM generates the response

Now the LLM has everything it needs:

- Company context (CAG)

- SLA definitions (RAG)

- Current vulnerability data (MCP)

It can generate a response such as:

“ACME Corp. is currently missing SLAs on 3 vulnerabilities that reach their deadlines today: 1 critical vulnerability breaching the 24-hour SLA, 1 high severity vulnerability breaching the 7-day SLA, and 1 medium severity vulnerability breaching the 30-day SLA, all requiring immediate remediation.”

Managing Embedding Lifecycle

One challenge with RAG is keeping your knowledge base current. Here’s how to handle it:

When a document changes:

- Detect the change (via file hash or monitoring your document store)

- Query all embeddings that came from the old document

- Set their status to “archived” to invalidate them

- Create new chunks from the updated document

- Generate new embeddings and add them to the database

This approach ensures that normal queries only return current embeddings. The responses will be based on the latest information.

If a query requires historical context (e.g., “When did our vulnerability SLAs change?”), you can include archived embeddings in the search to provide the historical perspective.

You may also need additional lifecycle logic, such as permanently removing archived embeddings after a set retention period.
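The archive-and-replace flow can be sketched with an in-memory list standing in for the vector database. The `Record` fields, the `refresh` and `search` helpers, and the placeholder hash strings are hypothetical, and the chunk text stands in for the stored embedding.

```python
from dataclasses import dataclass


@dataclass
class Record:
    doc_id: str
    doc_hash: str
    text: str            # stands in for the chunk's embedding
    status: str = "active"


# Stand-in for the vector database, seeded with the old policy version.
store: list[Record] = [Record("vuln-policy", "hash-v1", "Critical: 48 hours.")]


def refresh(doc_id: str, new_hash: str, new_chunks: list[str]) -> bool:
    """Archive old records and add new ones if the document hash changed."""
    current = [r for r in store if r.doc_id == doc_id and r.status == "active"]
    if current and all(r.doc_hash == new_hash for r in current):
        return False  # document unchanged, nothing to do
    for record in current:
        record.status = "archived"
    store.extend(Record(doc_id, new_hash, chunk) for chunk in new_chunks)
    return True


def search(include_archived: bool = False) -> list[Record]:
    """Normal queries see only active records; historical ones see everything."""
    return [r for r in store if include_archived or r.status == "active"]


changed = refresh("vuln-policy", "hash-v2", ["Critical: 24 hours."])
print([r.text for r in search()])
```

Calling `search(include_archived=True)` returns both versions, which is what a question like “When did our vulnerability SLAs change?” would need.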

Common Pitfalls to Avoid

When building a grounded AI copilot, there are several common mistakes that can undermine your implementation:

Chunking documents incorrectly: Chunking too small loses context, while chunking too large reduces retrieval precision. Test different chunk sizes with real queries to find the right balance for your documents.

Not versioning your embeddings: Without proper versioning and archiving, you lose the ability to track how policies and procedures have evolved. Always maintain document hashes and version metadata.

Over-relying on RAG without human review: AI should assist, not replace human judgment. Implement review workflows for critical outputs, especially for compliance-related decisions.

Not testing with real user queries: Build your system around actual questions your users ask, not hypothetical scenarios. Collect and analyze real queries to improve your chunking and retrieval strategies.

Failing to run evaluations after changes: Every time you modify your chunking strategy, update embeddings, or change models, run a comprehensive evaluation suite. Without evals, you won’t catch regressions in quality.

Not testing different models: Different embedding models and LLMs perform differently on your specific use case. Test multiple options and measure their performance on your actual data.

Providing too much context to the LLM: More context isn’t always better. Limit the LLM’s context to what it truly needs for each query. Excess context can dilute relevance and increase latency and costs.

Not making the implementation auditable: For compliance and security work, you need to be able to trace every response back to its sources. Implement logging, citation tracking, and audit trails that show which embeddings and tools were used for each response.
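As one possible shape for such an audit trail, the sketch below writes a structured JSON line per response. The field names and the chunk identifier format are assumptions; adapt them to whatever your logging pipeline expects.

```python
import json
from datetime import datetime, timezone


def audit_record(question: str, chunk_ids: list[str],
                 tool_calls: list[str], answer: str) -> str:
    """Serialize one response's provenance as a single JSON log line."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "question": question,
        "retrieved_chunk_ids": chunk_ids,
        "tool_calls": tool_calls,
        "answer": answer,
    }
    return json.dumps(entry, sort_keys=True)


# Hypothetical identifiers and answer text, for illustration only.
line = audit_record(
    "Are we maintaining our vulnerability response SLAs?",
    ["vuln-policy#sla-definitions"],
    ["get_vulnerability_details"],
    "2 vulnerabilities exceed their SLA.",
)
print(line)
```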

Why This Matters: Reducing Friction

At the end of the day, if it’s more difficult to use your LLM-based tool than it is to retrieve the data manually, then the LLM isn’t providing any value.

That’s why grounding your AI in context is so important. It’s not just about reducing hallucinations; it’s about building tools that users actually want to use. Tools that add value by reducing the time it takes to retrieve data and make connections. Tools that are as frictionless as possible.

The goal is to make it easier and faster to use the tool than to perform these tasks manually. When you eliminate the copy-paste fatigue, keep data current automatically, and put information in the hands of users who might not be able to retrieve it themselves, that’s when AI becomes truly valuable for compliance and security work.

ASCERA: Purpose-Built for Compliance and Security

If you’ve made it this far, thank you! Also, you’re probably wondering: “This sounds great, but it sounds like a lot of work to build.” That’s a fair point.

Building a grounded AI copilot with proper integrations, contextualization, and tool calling capabilities is time-consuming. It requires deep expertise in both the technical implementation and the compliance and security domains.

This is what ASCERA is designed to solve. If you’re a compliance or security professional looking to leverage AI in your work, but you don’t have the time or resources to build this from scratch, you’re not alone.

ASCERA is purpose-built for compliance and security professionals. We’ve already done the heavy lifting of building out integrations with the tools you use, establishing comprehensive knowledge bases of compliance frameworks, and creating the contextualization layers that make AI responses accurate and actionable.

Instead of spending months researching integrations and building RAG pipelines, ASCERA provides you with a solution that already understands your environment. It has context about your compliance requirements, security policies, and operational tools built in.

ASCERA helps organizations not only achieve compliance but maintain continuous compliance through automated processes, real-time control monitoring, and actionable insights. By leveraging ASCERA’s pre-built capabilities, you can focus on what matters: improving your security posture and meeting your compliance objectives.